Previous Posts

My poor colleague was pulling her hair out in frustration today. Here's what happened: She was trying to import an Excel spreadsheet into SAS, and it didn’t work. Here's what to do.

The expression “can’t see the forest for the trees” often comes to mind when reviewing a statistical analysis. We get so involved in reporting “statistically significant” results and p-values that we fail to explore the big picture of our results. It’s understandable that this can happen. We have a hypothesis to test. We go through a […]

Mixed models are hard. They’re abstract, they’re a little weird, and there is no common vocabulary or notation for them. But they’re also extremely important to understand because many data sets require their use. Repeated measures ANOVA has too many limitations. It just doesn’t cut it anymore. One of the most difficult parts […]

There is a bit of art and experience to model building. You need to build a model to answer your research question, but how do you build a statistical model when there are no instructions in the box? Should you start with all your predictors or look at each one separately? Do you always take […]

When a model has a binary outcome, one common effect size is a risk ratio. As a reminder, a risk ratio is simply a ratio of two probabilities. (The risk ratio is also called relative risk.) Recently I have had a few questions about risk ratios less than one. A predictor variable with a risk ratio of less than one is often labeled a “protective factor” (at least in epidemiology). This can be confusing because, in our typical understanding of those terms, it makes no sense for a risk to be protective. So how can a RISK be protective?
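To make “a ratio of two probabilities” concrete, here is a minimal Python sketch. The counts are hypothetical, made up only for illustration, and the helper names `risk` and `risk_ratio` are our own, not from any library. It computes the risk (probability of the outcome) in each group and divides the exposed group’s risk by the unexposed group’s risk.

```python
def risk(events: int, total: int) -> float:
    """Probability (risk) of the outcome within one group."""
    return events / total


def risk_ratio(events_exposed: int, n_exposed: int,
               events_unexposed: int, n_unexposed: int) -> float:
    """Risk in the exposed group divided by risk in the unexposed group."""
    return risk(events_exposed, n_exposed) / risk(events_unexposed, n_unexposed)


# Hypothetical counts: 10 of 100 exposed and 20 of 100 unexposed people
# experience the outcome.
rr = risk_ratio(events_exposed=10, n_exposed=100,
                events_unexposed=20, n_unexposed=100)
print(f"risk ratio = {rr:.2f}")  # risk ratio = 0.50
```

Here the ratio is 0.10 / 0.20 = 0.5: the exposed group has half the probability of the outcome, which is the situation that gets labeled “protective.”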

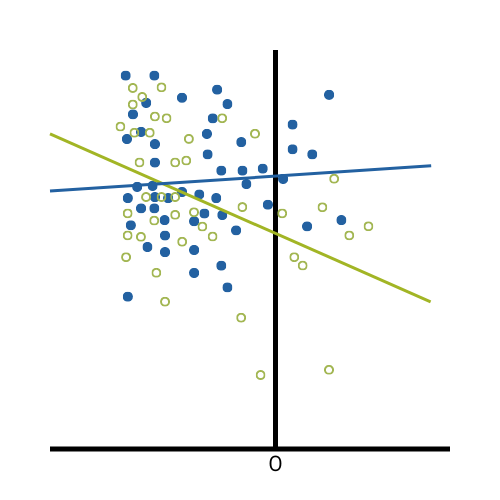

In a recent article, we reviewed the impact of removing the intercept from a regression model when the predictor variable is categorical. This month we’re going to talk about removing the intercept when the predictor variable is continuous. Spoiler alert: You should never remove the intercept when a predictor variable is continuous. Here’s why.
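One consequence of dropping the intercept can be shown with a small sketch. The data below are hypothetical and lie exactly on the line y = 10 + 2x. Ordinary least squares with an intercept recovers the slope of 2, but forcing the line through the origin (intercept fixed at zero) produces a badly distorted slope. This illustrates only one part of the argument and is not the article’s full reasoning; the function names are our own.

```python
def fit_with_intercept(x, y):
    """Ordinary least squares slope and intercept for a single predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_through_origin(x, y):
    """Least squares slope when the intercept is forced to zero."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi ** 2 for xi in x)


# Hypothetical data lying exactly on y = 10 + 2x.
x = [1, 2, 3, 4, 5]
y = [10 + 2 * xi for xi in x]

slope, intercept = fit_with_intercept(x, y)
print(slope, intercept)                    # 2.0 10.0
print(round(fit_through_origin(x, y), 3))  # 4.727
```

Without the intercept, the fitted slope (about 4.73) has to absorb the vertical offset of 10, so it no longer describes how y changes per unit of x.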

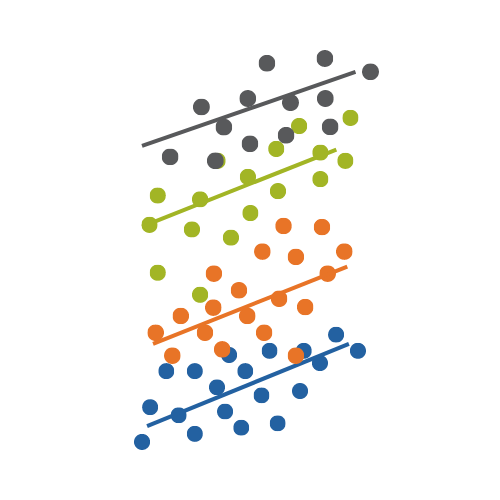

by Christos Giannoulis, PhD

Attributes are often measured using multiple variables with different upper and lower limits. For example, we may have five measures of political orientation, each with a different range of values. Each variable is measured in a different way. The measures have a different number of categories and the low and high […]
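One common way to put such measures on a shared footing is to rescale each score by its own possible range so that every variable runs from 0 to 1. Multiplying by 100 gives what is sometimes called a percent-of-maximum-possible score. This is a minimal sketch of that general idea, not necessarily the approach the article recommends; the item names, scores, and ranges below are hypothetical.

```python
def rescale(value: float, low: float, high: float) -> float:
    """Map a score from its possible range [low, high] onto 0 to 1."""
    if high <= low:
        raise ValueError("high must exceed low")
    return (value - low) / (high - low)


# Hypothetical political-orientation items with different possible ranges:
# (observed score, lowest possible value, highest possible value)
items = {
    "item_a": (4, 1, 7),
    "item_b": (6, 0, 10),
    "item_c": (3, 1, 5),
}

rescaled = {name: rescale(v, lo, hi) for name, (v, lo, hi) in items.items()}
print(rescaled)  # {'item_a': 0.5, 'item_b': 0.6, 'item_c': 0.5}
```

Rescaling by the possible range (rather than the observed range) keeps the meaning of the endpoints fixed, so a 0.5 sits at the midpoint of every scale regardless of how many categories it has.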

Ratios are everywhere in statistics: coefficient of variation, hazard ratio, odds ratio, and the list goes on. Join Elaine Eisenbeisz as she presents an overview of the how and why of various ratios commonly used in statistical practice.
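As a quick illustration of two of these ratios, here is a short Python sketch using only the standard library. The coefficient of variation is computed as the sample standard deviation divided by the mean, and the odds ratio comes from a 2x2 table. The numbers are hypothetical and the function names are our own.

```python
import statistics


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    return statistics.stdev(values) / statistics.mean(values)


def odds_ratio(a, b, c, d):
    """Odds ratio for a 2x2 table laid out as:

                  outcome   no outcome
       exposed       a          b
       unexposed     c          d
    """
    return (a / b) / (c / d)


print(round(coefficient_of_variation([8, 10, 12]), 3))  # 0.2
print(round(odds_ratio(10, 90, 20, 80), 3))             # 0.444
```

Note that for these same hypothetical counts the risk ratio would be (10/100) / (20/100) = 0.5, so the odds ratio (about 0.44) and the risk ratio are different quantities even though both compare the same two groups.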

Can we ignore the fact that a variable is bounded and just run our analysis as if the data wasn’t bounded?

Q16: The different reference group definitions (between R and SPSS) seem to give different significance values. Is that because they are testing different hypotheses? (e.g., "Is group 1 different from the reference group?")

A: Yes. Because they're using different reference groups, we have different hypothesis tests and therefore different p-values.
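A small sketch shows why the hypotheses differ. With dummy (treatment) coding and a single categorical predictor, each coefficient equals that group's mean minus the reference group's mean, so changing the reference changes which differences are being tested. We assume here that R's default treatment contrasts use the first factor level as the reference, while many SPSS procedures default to the last category; check the settings in your own analysis. The data and the helper name `treatment_coefficients` are hypothetical.

```python
from statistics import mean

# Hypothetical outcome values for a three-level categorical predictor.
data = {
    "group1": [4.0, 5.0, 6.0],
    "group2": [6.0, 7.0, 8.0],
    "group3": [9.0, 10.0, 11.0],
}
means = {g: mean(v) for g, v in data.items()}


def treatment_coefficients(means, reference):
    """With dummy (treatment) coding and one categorical predictor, each
    non-reference coefficient equals that group's mean minus the
    reference group's mean."""
    return {g: m - means[reference] for g, m in means.items() if g != reference}


first_ref = treatment_coefficients(means, reference="group1")  # first level as reference
last_ref = treatment_coefficients(means, reference="group3")   # last level as reference
print(first_ref)  # {'group2': 2.0, 'group3': 5.0}
print(last_ref)   # {'group1': -5.0, 'group2': -3.0}
```

The first set of coefficients tests "Is group 2 (or 3) different from group 1?", while the second tests "Is group 1 (or 2) different from group 3?". These are different hypotheses, so their p-values need not match.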

stat skill-building compass
