Every statistical model and hypothesis test has assumptions.

And yes, if you’re going to use a statistical test, you need to check whether those assumptions are reasonable to whatever extent you can.

Some assumptions are easier to check than others. Some are so obviously reasonable that you don’t need to do much to check them most of the time. And some have no good way of being checked directly, so you have to use situational clues.

There are so many nuances with assumptions as well: depending on a lot of the details of your particular study and data set, violations of some assumptions may be more or less serious. It depends on a lot of details: sample sizes, imbalance in the data across groups, whether the study is exploratory or confirmatory, etc.

And here’s the kicker: the simple rules your stats professor told you to use to test assumptions were absolutely sufficient when you were learning about tests and assumptions. But now that you’re doing real data analysis? It’s time to dig into the details.

So here are some guidelines about checking assumptions that should help you make decisions about which to check and when to conclude that you’ve checked enough.

Before you begin

- make sure you understand what the assumptions are and what they mean. There is a lot of misinformation and vague information out there about assumptions.

- Don’t forget that when assumptions violations are serious, it will call into question all your results. This really is important.

Don’t rely on a single statistical test to decide if another test’s assumptions have been met.

There are many tests, like Levene’s test for homogeneity of variance, the Kolmogorov-Smirnov test for normality, the Bartlett’s test for sphericity, whose main usage is to test the assumptions of another test.

There are many tests, like Levene’s test for homogeneity of variance, the Kolmogorov-Smirnov test for normality, the Bartlett’s test for sphericity, whose main usage is to test the assumptions of another test.

These tests probably have other uses, but this is how I’ve generally seen them used.

These tests provide useful information about whether an assumption is being met. But they’re just one piece of information that you should use to decide if the assumption is reasonable.

Because, nuances.

Let’s use that same example of Levene’s test of homogeneity of variance. It’s often used in ANOVA as a sole decision criterion. And software makes it so easy.

But it’s too simple. Don’t do it.

- It relies too much on p-values, and therefore, sample sizes. If the sample size is large, Levene’s will have a smaller p-value than if the sample size is small, given the same variances. So it’s very likely that you’re overstating a problem with the assumption in large samples and understating it in small samples. You can’t ignore the actual size difference in the variances when making this decision. So sure, look at the p-value, but also look at the actual variances and how much bigger some are than others. (In other words, actually look at the effect size, not just the p-value).

- The ANOVA is generally considered robust to violations of this assumption when sample sizes across groups are equal. So even if Levene’s is significant, moderately different variances may not be a problem in balanced data sets. Keppel (1992) suggests that a good rule of thumb is that if sample sizes are equal, robustness should hold until the largest variance is more than 9 times the smallest variance.

- This robustness goes away the more unbalanced the samples are. So you need to use judgment here, taking into account both the imbalance and the actual difference in variances.

Gather Evidence Instead

Use the results of the Levene’s test as one piece of evidence you’ll use to make a decision.

In addition to that and the ratio rule of thumb, the other pieces of info could potentially include:

- A graph of your data. Is there an obvious difference in spread across groups?

- A different test of the same assumption, if it exists. (Hartley’s F-max is another one for equal variances). See if the results match.

Consider each piece of evidence within the wider data context.

Now make a judgment. Take into account the sample sizes when interpreting p-values from any tests.

I know it’s not comfortable to make judgments based on uncertain information. Experience helps here.

But remember, in data analysis, it’s impossible not to.

Be transparent in what you did and on what evidence you based your decision.

If you need help figuring out the best way to test assumptions or to decide if yours are met, ask one of our statistics mentors in Statistically Speaking. This is just the kind of question we can help you with.

Hi,

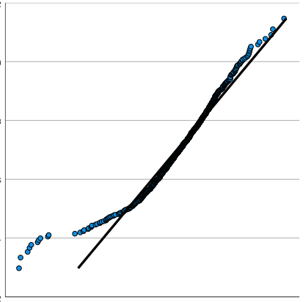

I have a large dataset with two groups, each with 19,735 values. The data looks quite homogenous, but all tests for homogeneity turn up very significant p-values when I include all the data. But if I sub-sample the data to extract just 1000 rows of the data (I didn’t even take care to ensure the number of sub-samples between the groups was consistent) the homogeneity test turns up a non-significant p-value. Essentially this shows that the significant p-value I get is in large-part my large sample sizes.

It does seem silly, with large sample sizes tests for normality and homogeneity seem to be almost useless, it feels almost like being punished for having large sample sizes! I’ll probably just forget the hassle and use a non-parametric test as I really don’t know enough about statistics to make a good judgement as to whether the amount of heterogeneity I have is too much. Luckily I guess my large sample size will make the power of any test I do quite high.

Hi Sam,

This is a great example!

Thank you for this insightful post.

I had a question on a data project I was recently working on. The requirement is to compare the performance between two samples, where one sample has 366 records and the other has 592 records. The sample variances are 1.202 and 1.304 respectively. Planning to do a t-hypothesis test for means for this. However, to establish homogeneity of variance using Levene test, are the different sample sizes alright or are they not appropriate for the Levene test.

Thank you for your advice.

-Rick

Hi Rick,

I wouldn’t use Levene test here. It’s a perfect example of where it’s likely to be significant even though the variances are quite similar, just due to your large sample size.