Effect size statistics are all the rage these days.

Journal editors are demanding them. Committees won’t pass dissertations without them.

But the reason to compute them is not just that someone wants them — they can truly help you understand your data analysis.

What Is an Effect Size Statistic?

And yes, familiar statistics such as eta-squared, Cohen’s d, and R-squared definitely qualify. But the concept of an effect size statistic is actually much broader. Here’s a description from a nice article on effect size statistics:

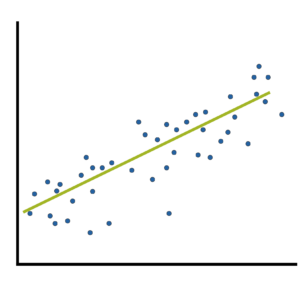

If you think about it, many familiar statistics fit this description. Regression coefficients give information about the magnitude and direction of the relationship between two variables. So do correlation coefficients. (more…)
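
To make that concrete, here is a minimal sketch that computes a regression slope and a correlation coefficient by hand. The data and the names `hours`, `score`, and `slope_and_correlation` are made up for illustration; nothing here comes from a real study.

```python
from math import sqrt


def slope_and_correlation(x, y):
    """Return (slope of y regressed on x, Pearson correlation of x and y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squares of the deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    slope = sxy / sxx             # change in y per one-unit change in x
    r = sxy / sqrt(sxx * syy)     # unit-free strength of the linear relationship
    return slope, r


# Hypothetical data: hours of study and exam score for five students.
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]

slope, r = slope_and_correlation(hours, score)
print(f"slope = {slope:.2f} points per hour, r = {r:.3f}")
```

Both numbers are positive, so both say the relationship goes in the same direction. The slope expresses the size of the effect in the original units (points per hour), while the correlation expresses it on a standardized scale from -1 to 1.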

One of the most difficult steps in calculating sample size estimates is determining the smallest scientifically meaningful effect size.

Here’s the logic:

The power of every significance test is based on four things: the alpha level, the size of the effect, the amount of variation in the data, and the sample size.
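
Here is a rough sketch of how those four ingredients combine, using a two-sided comparison of two group means as the example. It relies on a normal approximation and assumes equal group sizes and a common standard deviation, so treat it as an illustration, not a replacement for power software. The function name `approx_power` and the example numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(mean_diff, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison of means.

    Assumes a normal approximation, equal group sizes, and a common SD.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)      # critical value set by the alpha level
    se = sd * sqrt(2 / n_per_group)        # standard error of the mean difference
    shift = abs(mean_diff) / se            # effect size relative to its standard error
    # Probability of landing in either rejection region when the effect is real.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


# Hypothetical scenario: a 5-point difference, SD of 10, 64 people per group.
print(round(approx_power(mean_diff=5, sd=10, n_per_group=64), 2))
```

Changing any one argument while holding the others fixed shows its role: a larger effect, a larger sample, or a larger alpha raises power, and more variation lowers it.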

You will measure the effect size in question differently, depending on which statistical test you’re performing. It could be a mean difference, a difference in proportions, a correlation, a regression slope, an odds ratio, and so on.

When you’re planning a study and estimating the sample size needed for (more…)

Nearly all granting agencies require an estimate of an adequate sample size to detect the effects hypothesized in the study. But every study is well served by a sample size estimate, as it can save a great deal of resources.

Why? Undersized studies can’t find real results, and oversized studies find even insubstantial ones. Both undersized and oversized studies waste time, energy, and money; the former by using resources without finding results, and the latter by using more resources than necessary. Both expose an unnecessary number of participants to experimental risks.
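
As a rough illustration of what "undersized" and "oversized" mean in numbers, the sketch below estimates the sample size per group needed to compare two means. It uses the common normal-approximation formula, assumes a two-sided test with equal group sizes, and takes the effect as a standardized mean difference (Cohen's d). The function name `n_per_group` and the example effect sizes are hypothetical, and an exact t-test calculation would give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-group comparison.

    d is the standardized mean difference; normal approximation assumed.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the alpha level
    z_power = z.inv_cdf(power)           # quantile matching the target power
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


# Hypothetical candidates for the smallest meaningful effect.
for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {n_per_group(d)} per group")
```

The required sample grows quickly as the smallest effect you care about shrinks. A study sized for d = 0.5 is badly undersized if the meaningful effect is 0.2, and a study sized for 0.2 spends far more than needed if only effects of 0.5 or larger matter.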

The trick is (more…)

When many of us hear “Effect Size Statistic,” we immediately think we need one of a few statistics: Eta-squared, Cohen’s d, R-squared.
