Odds ratios have a unique part to play in describing the effects of logistic regression models. But that doesn’t mean they’re easy to communicate to an audience who is likely to misinterpret them. So writing up your odds ratios has to be done with care. (more…)

Karen Grace-Martin

Guidelines for writing up three types of odds ratios

Logistic Regression Analysis: Understanding Odds and Probability

Updated 11/22/2021

Probability and odds measure the same thing: the likelihood or propensity or possibility of a specific outcome.

People use the terms odds and probability interchangeably in casual usage, but that is unfortunate. It just creates confusion because they are not equivalent.

How Odds and Probability Differ

They measure the same thing on different scales. Imagine how confusing it would be if people used degrees Celsius and degrees Fahrenheit interchangeably. “It’s going to be 35 degrees today” could really make you dress the wrong way.
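To make the two scales concrete: odds are the probability of an outcome divided by the probability that it doesn't happen. Here is a minimal Python sketch of the conversion in both directions. The function names are hypothetical, chosen only for illustration.

```python
def probability_to_odds(p):
    """Convert a probability (0 <= p < 1) to odds in favor of the outcome."""
    return p / (1 - p)


def odds_to_probability(odds):
    """Convert odds (>= 0) back to a probability."""
    return odds / (1 + odds)


# The same likelihood expressed on both scales.
for p in (0.10, 0.50, 0.80):
    odds = probability_to_odds(p)
    print(f"probability {p:.2f} -> odds {odds:.2f}")
```

A probability of .50 is odds of 1, and a probability of .80 is odds of 4, so the same number means very different things on the two scales.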

In measuring the likelihood of any outcome, we need to know (more…)

Interpreting Regression Coefficients: Changing the scale of predictor variables

One issue that affects how to interpret regression coefficients is the scale of the variables. In linear regression, the scaling of both the response variable, Y, and the relevant predictor, X, is important.

In regression models like logistic regression, where the response variable is categorical, and therefore doesn’t have a numerical scale, this only applies to predictor variables, X.

This can be an issue of measurement units–miles vs. kilometers. Or it can be an issue of simply how big “one unit” is. For example, whether one unit of annual income is measured in dollars, thousands of dollars, or millions of dollars.

The good news is you can easily change the scale of variables to make it easier to interpret their regression coefficients. This works as well for functions of regression coefficients, like odds ratios and rate ratios.

All you have to do is create a new variable in your data set (don’t overwrite the original one in case you make a mistake). This new variable is simply the old one multiplied or divided by some constant. The constant is often a power of 10, but it doesn’t have to be. Then use the new variable in your model instead of the original one.

Since regression coefficients and odds ratios tell you the effect of a one-unit change in the predictor, you should choose the constant so that a one-unit change in the new variable is meaningful.

An Example of Rescaling Predictors

Here is a really simple example that I use in one of my workshops.

Y= first semester college GPA

X1= high school GPA

X2=SAT score

High School and first semester College GPA are both measured on a scale from 0 to 4. If you’re not familiar with this scaling, a 0 means you failed a class. An A (usually the top possible score) is a 4, a B is a 3, a C is a 2, and a D is a 1. So for a grade point average, a one point difference is very big.

If you’re an admissions counselor looking at high school transcripts, there is a big difference between a 3.7 GPA and a 2.7 GPA.

SAT score is on an entirely different scale. It’s a normed scale, so that the minimum is 200, the maximum is 800, and the mean is 500. Scores are in units of 10. You literally cannot receive a score of 622. You can get only 620 or 630.

So a one-point difference is not only tiny, it’s meaningless. Even a 10 point difference in SAT scores is pretty small. But 50 points is meaningful, and 100 points is large.

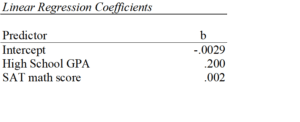

If you leave both predictors on the original scale in a regression that predicts first semester GPA, you get the following results:

Let’s interpret those coefficients.

The coefficient for high school GPA here is .20. This says that for each one-unit difference in GPA, we expect, on average, a .2 higher first semester GPA. While a one-unit change in GPA is huge, that’s reasonably meaningful.

The coefficient for SAT math scores is .002. That looks tiny. It says that for each one-unit difference in SAT math score, we expect, on average, a .002 higher first semester GPA. But a one-unit difference in SAT score is too small to interpret. It’s too small to be meaningful.

So we can change the scaling of our SAT score predictor to be in 10-point differences. Or in 100-point differences. Choose the scale for which one unit is meaningful.

Changing the scale by multiplying the coefficient

In a linear model, you can simply multiply the coefficient by 10 to reflect a 10-point difference. That’s a coefficient of .02. So for each 10 point difference in math SAT score we expect, on average, a .02 higher first semester GPA.

Or we could multiply the coefficient by 50 to reflect a 50-point difference. That’s a coefficient of .10. So for each 50 point difference in math SAT score we expect, on average, a .1 higher first semester GPA.

When it’s easier to just change the variables

Multiplying the coefficient is easier than rescaling the original variable if you only have one or two of these and you’re using linear regression.

This shortcut doesn’t work once you’ve done any sort of back-transformation in generalized linear models. So you can’t just multiply the odds ratio or the incidence rate ratio by 10 or 50. Both of these are created by exponentiating the regression coefficient. Because of the order of operations in algebra, you have to first multiply the coefficient by the constant, and then re-exponentiate.
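The order of operations is easy to check numerically. This is a small Python sketch, assuming a made-up logistic regression coefficient of 0.004 per SAT point. That value and the variable names are hypothetical and do not come from the example above.

```python
import math

# Hypothetical logistic regression coefficient (log-odds per 1 SAT point).
# This value is made up for illustration only.
coef_per_point = 0.004

odds_ratio_per_point = math.exp(coef_per_point)

# Correct: multiply the coefficient first, then exponentiate.
odds_ratio_per_50 = math.exp(50 * coef_per_point)

# Incorrect: multiplying the already-exponentiated odds ratio by 50.
not_an_odds_ratio = 50 * odds_ratio_per_point

print(f"odds ratio per 1 point:   {odds_ratio_per_point:.4f}")
print(f"odds ratio per 50 points: {odds_ratio_per_50:.4f}")
print(f"50 * odds ratio (wrong):  {not_an_odds_ratio:.4f}")
```

If all you have is the odds ratio itself, raising it to the 50th power gives the same answer as re-exponentiating, because exp(50b) equals exp(b) raised to the 50th power.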

Likewise, if you are using this predictor in more than one linear regression model, it’s much simpler to rescale the variable in the first place. Simply divide that SAT score by 10 or 50 and the coefficient will be .02 or .10, respectively.
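Here is a rough Python sketch of rescaling the variable itself. It assumes a one-predictor regression for simplicity (the example above has two predictors), and the data values are hypothetical, invented only to show the mechanics. The helper `ols_slope` is also just an illustration, not a library function.

```python
def ols_slope(x, y):
    """Least-squares slope for a one-predictor linear regression."""
    x_mean = sum(x) / len(x)
    y_mean = sum(y) / len(y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    return sxy / sxx


# Hypothetical values for illustration only; not the workshop data.
sat_math = [450, 500, 520, 580, 610, 660, 700]
college_gpa = [2.6, 2.9, 2.8, 3.1, 3.0, 3.4, 3.5]

# Keep the original variable and add a rescaled copy next to it.
sat_math_per50 = [score / 50 for score in sat_math]

slope_per_point = ols_slope(sat_math, college_gpa)
slope_per_50 = ols_slope(sat_math_per50, college_gpa)

print(f"slope per 1 SAT point:   {slope_per_point:.4f}")
print(f"slope per 50 SAT points: {slope_per_50:.4f}")
```

Dividing the predictor by 50 makes the slope 50 times larger, which matches multiplying the original coefficient by 50. The fit of the model doesn't change, only the size of "one unit."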

Updated 12/2/2021

Multiple Imputation in a Nutshell

Imputation as an approach to missing data has been around for decades.

You probably learned about mean imputation in methods classes, only to be told to never do it for a variety of very good reasons. Mean imputation, in which each missing value is replaced, or imputed, with the mean of observed values of that variable, is not the only type of imputation, however. (more…)

Odds Ratio: Standardized or Unstandardized Effect Size?

Effect size statistics are extremely important for interpreting statistical results. The emphasis on reporting them has been a great development over the past decade. (more…)

Why report estimated marginal means?

Updated 8/18/2021

I recently was asked whether to report means from descriptive statistics or from the Estimated Marginal Means with SPSS GLM.

The short answer: Report the Estimated Marginal Means (almost always).

To understand why and the rare case it doesn’t matter, let’s dig in a bit with a longer answer.

First, a marginal mean is the mean response for each category of a factor, adjusted for any other variables in the model (more on this later).

Just about any time you include a factor in a linear model, you’ll want to report the mean for each group. The F test of the model in the ANOVA table will give you a p-value for the null hypothesis that those means are equal. And that’s important.

But you need to see the means and their standard errors to interpret the results. The difference in those means is what measures the effect of the factor. While that difference can also appear in the regression coefficients, looking at the means themselves gives you context and makes interpretation more straightforward. This is especially true if you have interactions in the model.

Some basic info about marginal means

- In SPSS menus, they are in the Options button and in SPSS’s syntax they’re EMMEANS.

- These are called LSMeans in SAS, margins in Stata, and emmeans in R’s emmeans package.

- Although I’m talking about them in the context of linear models, all the software has them in other types of models, including linear mixed models, generalized linear models, and generalized linear mixed models.

- They are also called predicted means, and model-based means. There are probably a few other names for them, because that’s what happens in statistics.

When marginal means are the same as observed means

Let’s consider a few different models. In all of these, our factor of interest, X, is a categorical predictor for which we’re calculating Estimated Marginal Means. We’ll call it the Independent Variable (IV).

Model 1: No other predictors

If you have just a single factor in the model (a one-way anova), marginal means and observed means will be the same.

Observed means are what you would get if you simply calculated the mean of Y for each group of X.

Model 2: Other categorical predictors, and all are balanced

Likewise, if you have other factors in the model and all of them are balanced, the estimated marginal means will be the same as the observed means you got from descriptive statistics.

Model 3: Other categorical predictors, unbalanced

Now things change. The marginal mean for our IV is different from the observed mean. It’s the mean for each group of the IV, averaged across the groups for the other factor.

When you’re observing the category an individual is in, you will pretty much never get balanced data. Even when you’re doing random assignment, balanced groups can be hard to achieve.

In this situation, the observed means will be different than the marginal means. So report the marginal means. They better reflect the main effect of your IV—the effect of that IV, averaged across the groups of the other factor.
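To see how the two kinds of means can diverge, here is a small Python sketch with hypothetical, deliberately unbalanced data for an IV with groups A and B and one other factor with levels F1 and F2. It assumes a model that includes the interaction, so that each marginal mean is the simple average of the cell means. In a main-effects-only model the software averages fitted values instead, so the numbers would differ somewhat.

```python
from statistics import mean

# Hypothetical unbalanced data: (IV group, other factor level) -> Y values.
cells = {
    ("A", "F1"): [10, 12, 11, 13],
    ("A", "F2"): [20],
    ("B", "F1"): [9],
    ("B", "F2"): [18, 19, 21, 22],
}


def observed_mean(group):
    """Mean of every Y value in the group, so bigger cells count more."""
    values = [y for (g, _), ys in cells.items() if g == group for y in ys]
    return mean(values)


def marginal_mean(group):
    """Average of the group's cell means, so each cell counts equally."""
    return mean(mean(ys) for (g, _), ys in cells.items() if g == group)


for group in ("A", "B"):
    print(group, "observed:", observed_mean(group),
          "marginal:", marginal_mean(group))
```

In this made-up data set, group A is mostly F1 and group B is mostly F2, and F2 has higher values. The observed means make B look higher than A, but much of that is the other factor. Once each cell counts equally, the comparison reverses.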

Model 4: A continuous covariate

When you have a covariate in the model the estimated marginal means will be adjusted for the covariate. Again, they’ll differ from observed means.

It works a little bit differently than it does with a factor. For a covariate, the estimated marginal mean is the mean of Y for each group of the IV at one specific value of the covariate.

By default in most software, this one specific value is the mean of the covariate. Therefore, you interpret the estimated marginal means of your IV as the mean of each group at the mean of the covariate.

This, of course, is the reason for including the covariate in the model–you want to see if your factor still has an effect, beyond the effect of the covariate. You are interested in the adjusted effects in both the overall F-test and in the means.

If you just use observed means and there was any association between the covariate and your IV, some of that mean difference would be driven by the covariate.

For example, say your IV is the type of math curriculum taught to first graders. There are two types. And say your covariate is child’s age, which is related to the outcome: math score.

It turns out that curriculum A has slightly older kids and a higher mean math score than curriculum B. Observed means for each curriculum will not account for the fact that the kids who received that curriculum were a little older. Marginal means will give you the mean math score for each group at the same age. In essence, it sets Age at a constant value before calculating the mean for each curriculum. This gives you a fairer comparison between the two curricula.
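The adjustment can be sketched in a few lines of Python. This assumes a model with one factor and one covariate with a common slope (no interaction), where the adjusted mean for a group is its observed mean minus the slope times the distance between the group's mean age and the chosen age. The ages and scores below are hypothetical, as are the function names.

```python
from statistics import mean

# Hypothetical (age in months, math score) pairs; made up for illustration.
data = {
    "A": [(78, 54), (80, 57), (82, 58), (84, 62)],
    "B": [(74, 50), (76, 51), (78, 55), (80, 56)],
}


def pooled_within_slope(groups):
    """Common slope of score on age, pooled across the groups."""
    sxy = sxx = 0.0
    for rows in groups.values():
        age_mean = mean(a for a, _ in rows)
        score_mean = mean(s for _, s in rows)
        sxy += sum((a - age_mean) * (s - score_mean) for a, s in rows)
        sxx += sum((a - age_mean) ** 2 for a, _ in rows)
    return sxy / sxx


def adjusted_mean(groups, group, at_age):
    """Group mean score, moved along the common slope to at_age."""
    rows = groups[group]
    slope = pooled_within_slope(groups)
    group_age = mean(a for a, _ in rows)
    return mean(s for _, s in rows) - slope * (group_age - at_age)


# Default used by most software: evaluate at the overall mean of the covariate.
overall_mean_age = mean(a for rows in data.values() for a, _ in rows)

for group, rows in data.items():
    print(group,
          "observed:", mean(s for _, s in rows),
          "adjusted:", round(adjusted_mean(data, group, overall_mean_age), 2))
```

In this made-up data, curriculum A has the higher observed mean, but its kids are also older. Once both groups are evaluated at the same age, most of that gap disappears. Passing a different `at_age` evaluates both groups at any other age you choose.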

But there is another advantage here. Although the default value of the covariate is its mean, you can change this default. This is especially helpful for interpreting interactions, where you can see the means for each group of the IV at both high and low values of the covariate.

In SPSS, you can change this default using syntax, but not through the menus.

For example, in this syntax, the EMMEANS statement reports the marginal means of Y at each level of the categorical variable X at the mean of the Covariate V.

UNIANOVA Y BY X WITH V

/INTERCEPT=INCLUDE

/EMMEANS=TABLES(X) WITH(V=MEAN)

/DESIGN=X V.

If instead you want to evaluate the effect of X at a specific value of V, say 50, you can just change the EMMEANS statement to:

/EMMEANS=TABLES(X) WITH(V=50)

Another good reason to use syntax.