Outliers. There are as many opinions on what to do about them as there are causes for them.

But there is a lot of bad advice out there about what to do with outliers.

In this training, we’ll take a step back and explore how to think about outliers so you can make good decisions based on your data and model. You’ll learn the different types of outliers and methods for figuring out which type you have. You’ll also learn how to determine whether, and how, they’re influential, and what to do about it.
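One simple way to get a feel for whether a point is influential is to refit the model without it and see how much the estimates move. The sketch below is not taken from the training itself; it is a minimal leave-one-out illustration for a one-predictor regression, using invented data and hypothetical helper names (`ols_slope_intercept`, `leave_one_out_slope_changes`). Standard measures such as Cook's distance and DFBETAS build on the same idea.

```python
def ols_slope_intercept(x, y):
    """Least-squares slope and intercept for a single predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def leave_one_out_slope_changes(x, y):
    """Refit without each point in turn; return the full-data slope and
    the change in slope caused by dropping each point."""
    full_slope, _ = ols_slope_intercept(x, y)
    changes = []
    for i in range(len(x)):
        x_rest = x[:i] + x[i + 1:]
        y_rest = y[:i] + y[i + 1:]
        slope_i, _ = ols_slope_intercept(x_rest, y_rest)
        changes.append(slope_i - full_slope)
    return full_slope, changes


# Hypothetical data, invented for illustration: the last point sits far
# from the others on x and does not follow their trend.
x = [1, 2, 3, 4, 5, 6, 7, 20]
y = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 15.0]

full_slope, changes = leave_one_out_slope_changes(x, y)
most_influential = max(range(len(x)), key=lambda i: abs(changes[i]))
print("slope with all points:", round(full_slope, 3))
print("index of point whose removal moves the slope most:", most_influential)
```

A large change for one point says that point is driving the estimate. It does not say whether the point is an error or a legitimate observation, which is a separate judgment.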

Note: This training is an exclusive benefit to members of the Statistically Speaking Membership Program and part of the Stat’s Amore Trainings Series. Each Stat’s Amore Training is approximately 90 minutes long.

About the Instructor

Karen Grace-Martin helps statistics practitioners gain an intuitive understanding of how statistics is applied to real data in research studies.

She has guided and trained researchers through their statistical analysis for over 15 years as a statistical consultant at Cornell University and through The Analysis Factor. She has master’s degrees in both applied statistics and social psychology and is an expert in SPSS and SAS.

Not a Member Yet?

It’s never too early to set yourself up for successful analysis with support and training from expert statisticians.

Just head over and sign up for Statistically Speaking.

You'll get access to this training webinar, 130+ other stats trainings, a pathway to work through the trainings that you need — plus the expert guidance you need to build statistical skill with live Q&A sessions and an ask-a-mentor forum.

After over 25 years of helping researchers hone their statistical skills to become better data analysts, I’ve had a few insights about what that process looks like.

The one thing you don’t need to become a great data analyst is some innate statistical genius. That kind of fixed mindset will undermine the growth in your statistical skills.

So to start your journey to becoming a skilled and confident statistical analyst, you need: (more…)

You’ll be excited to hear we’re doing another Statistics Skills Accelerator for our Statistically Speaking members: Count Models.

Stats Skills Accelerators are structured events focused on an important topic. They feature Stat’s Amore Trainings in a suggested order, as well as live Q&As specific to the Accelerator.

In August, our mentors will be running a new Accelerator. The first Q&A is August 6, 2025 at 3 pm ET, hosted by Jeff Meyer.

Count models are used when the outcome variable in a model or group comparison is a discrete count:

- Number of eggs in a clutch

- Number of days in intensive care

- Number of aggressive incidents in detention

Count models come in a few types, and any of these can also be used for rates:

- Poisson regression is the simplest and is the basis for all the other models, but its assumptions are rarely met with real data.

- Negative binomial regression adds an extra parameter to a Poisson regression to measure the extra variance that often occurs in real data.

- Truncated count models work when the lowest values (often just zero) cannot occur. This happens when a count has to occur in order to be part of the population of interest.

- Zero-inflated count models are used when there are more zeros than expected. For this model, some zeros could have been something else and others couldn’t.

- Hurdle models also work when there are more zeros than expected, but the process of having a zero is different. In these models, there is an actual “hurdle” one has to pass in order to have a non-zero count.

- Logistic regression applies when your count is out of a maximum possible number.
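Two of the ideas in the list above, extra variance and more zeros than expected, can be roughed out with simple descriptive checks before any model is fit. The sketch below uses invented counts and a hypothetical helper, `poisson_diagnostics`. It compares the sample variance to the mean (a Poisson distribution has them equal) and the observed share of zeros to the share a Poisson with the same mean would produce, which is exp(-mean). These are marginal checks that ignore predictors, so treat them as a first look rather than a test of model assumptions.

```python
import math
from statistics import mean, variance


def poisson_diagnostics(counts):
    """Rough descriptive checks for a sample of counts."""
    m = mean(counts)
    v = variance(counts)  # sample variance (n - 1 denominator)
    observed_zero_share = sum(1 for c in counts if c == 0) / len(counts)
    # A Poisson distribution with mean m puts probability exp(-m) on zero.
    expected_zero_share = math.exp(-m)
    return {
        "mean": m,
        "variance": v,
        "dispersion_ratio": v / m,  # about 1 if Poisson-like
        "observed_zero_share": observed_zero_share,
        "expected_zero_share": expected_zero_share,
    }


# Hypothetical counts, invented purely for illustration.
counts = [0, 0, 0, 0, 0, 0, 1, 1, 2, 3, 5, 8]
result = poisson_diagnostics(counts)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

A dispersion ratio well above 1 points toward a negative binomial model. An observed share of zeros well above the expected share points toward a zero-inflated or hurdle model, and choosing between those two depends on how the zeros arise.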

In this accelerator, learn about the different types of count models, how to understand their results, how to apply them to rates, and how to choose among them.

Note: This training is an exclusive benefit to members of the Statistically Speaking Membership Program and is a combination of watching recorded trainings and live events.

(more…)

There are not a lot of statistical methods designed just for ordinal variables. (There are a few, though.)

But that doesn’t mean that you’re stuck with few options. There are more than you’d think. (more…)

In April and May, we’re doing something new: including in membership the workshop Interpreting (Even Tricky) Regression Coefficients with Karen Grace-Martin.

We’ll be releasing the first 3 of 6 modules in April and modules 4-6 in May and holding a special Q&A with Karen at the end of each month.

If you’ve ever wanted to know how to interpret your results or set up your model to get the information you needed, you’ll love this workshop.

Although it’s at Stage 2 and focuses entirely on linear models, everything applies to all sorts of regression models — logistic, multilevel, count models. All of them.

(more…)

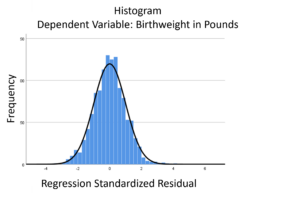

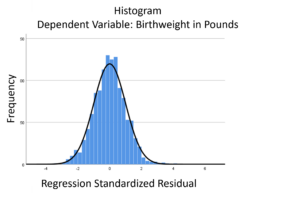

The linear model’s normality assumption, along with the constant variance assumption, is quite robust to departures. That means that even if the assumptions aren’t met perfectly, the resulting p-values and confidence intervals will still be reasonable estimates.

This is great because it gives you a bit of leeway to run linear models, which are intuitive and (relatively) straightforward. This is true for both linear regression and ANOVA.

You do need to check the assumptions anyway, though. You can’t just claim robustness and not check. Why? Because some departures are so far off that the p-values and confidence intervals become inaccurate. And in many cases there are remedial measures you can take to turn non-normal residuals into normal ones.

But sometimes you can’t.

Sometimes it’s because the dependent variable just isn’t appropriate for a linear model. The (more…)