classes, and colleagues and journal reviewers question your results because of it. But there are really only a few causes of multicollinearity. Let’s explore them.

Multicollinearity is simply redundancy in the information contained in predictor variables. If the redundancy is moderate, it only affects the interpretation of regression coefficients. But if it is severe (at or near perfect redundancy), it causes the model to “blow up.” (And yes, that’s a technical term.)

But the reality is that there are only a handful of situations that cause multicollinearity. And three of them have very simple solutions.

These are:

1. Improper dummy coding

When you change a categorical variable into dummy variables, you will have one fewer dummy variable than you had categories.

Two categories require one 1/0 dummy variable. Three categories require two 1/0 dummy variables. And so on.

You don’t need a dummy variable in the model for the last category (the reference category) because the last category is already indicated by having a 0 on all other dummy variables.

Including the last category just adds redundant information. The result is multicollinearity. So always check your dummy coding if it seems you’ve got a multicollinearity problem.
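To see why the extra dummy causes trouble, here is a minimal sketch in Python with NumPy, using a made-up three-category variable and assuming the model includes an intercept (as most regression software does by default). With an intercept, the three 0/1 columns always sum to the intercept column, so the design matrix loses a rank; dropping one category (here "C", treated as the reference) restores full rank.

```python
import numpy as np

# Hypothetical categorical predictor with three categories.
groups = np.array(["A", "B", "C", "A", "C", "B", "A", "C"])
levels = ["A", "B", "C"]

# One 0/1 column per category (all three categories coded).
all_dummies = np.column_stack([(groups == lvl).astype(float) for lvl in levels])

intercept = np.ones((len(groups), 1))

# Intercept plus ALL three dummies: the dummies sum to the intercept column,
# so one column is an exact linear combination of the others.
X_trap = np.hstack([intercept, all_dummies])

# Intercept plus two dummies; "C" is the reference category (all zeros).
X_ok = np.hstack([intercept, all_dummies[:, :2]])

print(X_trap.shape[1], np.linalg.matrix_rank(X_trap))  # 4 columns but rank 3
print(X_ok.shape[1], np.linalg.matrix_rank(X_ok))      # 3 columns, rank 3
```

A rank lower than the number of columns means the regression coefficients have no unique solution, which is exactly the situation in which the model blows up.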

2. Including a predictor that is computed from other predictors

Sometimes a set of variables includes both parts and a whole.

For example, I once had a client who was testing whether larger birds had a higher probability of finding a mate. This species had a special tail, and he wondered whether the size of the whole bird or the size of the tail was more helpful to the bird in finding a mate. To compare them, he put three measures of size into the model: body length, tail length, and total length of the bird. Total length was the sum of the first two.

The model blew up due to perfect multicollinearity: total length contains no information beyond body length plus tail length. Include any two, but not all three.
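The same rank check exposes the bird example. The measurements below are simulated, not the client’s data: total length is constructed as body length plus tail length, so the three size columns carry only two columns’ worth of information, while any two of them are fine.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
# Simulated (made-up) measurements, in cm.
body = rng.normal(20.0, 2.0, n)
tail = rng.normal(10.0, 1.5, n)
total = body + tail  # the whole is the exact sum of its parts

intercept = np.ones(n)
X_all_three = np.column_stack([intercept, body, tail, total])
X_two = np.column_stack([intercept, body, tail])

print(np.linalg.matrix_rank(X_all_three))  # 3, not 4: perfect multicollinearity
print(np.linalg.matrix_rank(X_two))        # 3: full rank, estimable
```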

I’ve also seen this in other part-and-whole situations, like having both a total score on some scale and the subscale scores that make it up. Since those subscale scores add up to the total, you can’t include them all.

3. Using nearly the same variable twice

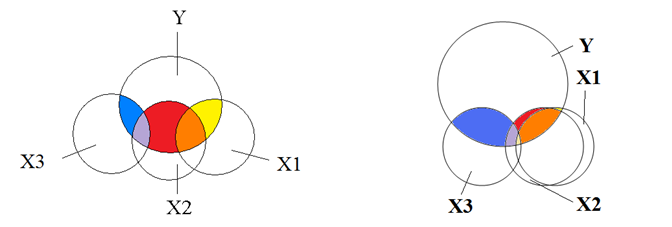

A related situation occurs when two measures of the same general concept are included in a model.

Sometimes researchers want to see which predicts an outcome better. For example, does personal income or household income predict stress level better? The problem is, very often these two variables are so related they’re redundant. You cannot put them into the model together and get good estimates of their unique relationship to Y.
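One way to quantify how redundant two measures are is the variance inflation factor (VIF). With only two predictors in the model, each predictor’s VIF reduces to 1 / (1 - r^2), where r is their correlation. The sketch below simulates hypothetical incomes in which household income is mostly personal income plus a smaller second component; the variables and numbers are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
# Hypothetical incomes (in thousands): household income is mostly personal income.
personal = rng.normal(50.0, 15.0, n)
household = personal + rng.normal(10.0, 5.0, n)

r = np.corrcoef(personal, household)[0, 1]
# With exactly two predictors, each one's VIF is 1 / (1 - r^2).
vif = 1.0 / (1.0 - r**2)
print(f"r = {r:.3f}, VIF = {vif:.1f}")
```

In this setup the population correlation is about 0.95, which corresponds to a VIF of about 10: the sampling variance of each income coefficient is roughly ten times what it would be if the two predictors were uncorrelated.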

There are a few different ways to approach this, and they can all work.

One option: if they are both just measuring income, combine them into a single income variable. Depending on the types of variables you have, their distributions, and how many you have, one good way of doing this is to combine them with Principal Components Analysis. It won’t help you answer the question of which is more predictive, but it will allow you to incorporate information from all the variables as predictors.
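A minimal sketch of the PCA option, again with simulated incomes (the variables and numbers are hypothetical). It standardizes both variables, computes principal components through NumPy’s singular value decomposition, and keeps the first component’s scores as a single combined income predictor.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200
# Hypothetical, highly redundant income measures.
personal = rng.normal(50.0, 15.0, n)
household = personal + rng.normal(10.0, 5.0, n)

X = np.column_stack([personal, household])
# Standardize each column so neither variable dominates because of its scale.
Z = (X - X.mean(axis=0)) / X.std(axis=0)

# Principal components via the SVD of the standardized data.
U, S, Vt = np.linalg.svd(Z, full_matrices=False)
income_pc1 = Z @ Vt[0]  # first-component scores: one combined "income" predictor

# Share of total variance captured by each component.
explained = S**2 / np.sum(S**2)
print(f"Share of variance captured by PC1: {explained[0]:.2f}")
```

For two standardized variables with correlation r, the first component captures (1 + r)/2 of the total variance, so highly redundant measures collapse almost entirely onto one component, which can then replace both in the regression.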

Another option: if you really want to answer the question of which is more predictive, use a predictive model-building technique to decide which one should go into the model. The more predictive variable will be selected first. Options include LASSO and CART.
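To illustrate the LASSO option, here is a small coordinate-descent LASSO written from scratch in NumPy. It is a teaching sketch, not the algorithm of any particular package; in practice you would use an established implementation and choose the penalty by cross-validation. The data are simulated: x1 drives the outcome, x2 is a correlated stand-in (correlation about 0.8), and with a hand-picked penalty alpha = 1.0 the LASSO keeps x1 and sets x2’s coefficient to exactly zero.

```python
import numpy as np

def soft_threshold(z, a):
    """Shrink z toward zero by a; values within [-a, a] become exactly 0."""
    return np.sign(z) * max(abs(z) - a, 0.0)

def lasso_cd(X, y, alpha, n_sweeps=500, tol=1e-8):
    """Minimize (1/(2n))*||y - X b||^2 + alpha*||b||_1 by cyclic coordinate descent.

    Assumes each column of X is standardized (mean 0, variance 1 with ddof=0)
    and y is centered, so no intercept is needed and each update is one soft-threshold.
    """
    n, p = X.shape
    b = np.zeros(p)
    resid = y.copy()  # residual y - X b for the current b (b starts at 0)
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # Inner product of column j with the partial residual that excludes predictor j
            z_j = X[:, j] @ resid / n + b[j]
            new_bj = soft_threshold(z_j, alpha)
            change = new_bj - b[j]
            if change != 0.0:
                resid -= X[:, j] * change
                b[j] = new_bj
            max_change = max(max_change, abs(change))
        if max_change < tol:
            break
    return b

rng = np.random.default_rng(3)
n = 1000
x1 = rng.normal(size=n)                   # hypothetical "more predictive" measure
x2 = 0.8 * x1 + 0.6 * rng.normal(size=n)  # related measure, correlation about 0.8
y = 3.0 * x1 + rng.normal(size=n)         # outcome driven by x1 only

X = np.column_stack([x1, x2])
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize (ddof=0, as lasso_cd assumes)
y = y - y.mean()

coefs = lasso_cd(X, y, alpha=1.0)
print(coefs)  # x1 keeps a nonzero coefficient; x2's is shrunk to exactly 0
```

One caution: when two predictors are nearly identical, which one the LASSO keeps can change from sample to sample, so treat the selection as evidence rather than proof of which measure is better.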

Detecting Multicollinearity from Other Causes

One of the nice things about these three causes of multicollinearity is that they’re fairly obvious. When the model blows up, you can usually track down one of them quickly.

Another situation that creates multicollinearity is harder to see, though. And that’s when two or more predictors are highly correlated. Here’s another article on Eight Ways to Detect Multicollinearity.
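The harder-to-see case often involves more than two predictors, and a pairwise correlation matrix can miss it. A common check is each predictor’s variance inflation factor, which equals the corresponding diagonal element of the inverse of the predictors’ correlation matrix. In this simulated sketch (the variables are hypothetical), x3 is nearly the sum of x1 and x2: pairwise correlations stay around 0.7 or below, yet every VIF is large.

```python
import numpy as np

def vifs(X):
    """Variance inflation factors: the diagonal of the inverse predictor correlation matrix.

    Equivalent to 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j
    on all the other predictors.
    """
    R = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(R))

rng = np.random.default_rng(4)
n = 300
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
x3 = x1 + x2 + 0.1 * rng.normal(size=n)  # hypothetical: nearly the sum of x1 and x2

X = np.column_stack([x1, x2, x3])
print(np.round(np.corrcoef(X, rowvar=False), 2))  # no pairwise r is near 1
print(np.round(vifs(X), 1))                        # but every VIF is large
```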
